Lambda Python Docker Handler Gotcha

Here is a quick tip about a gotcha when using AWS Lambda with a Python handler packaged in a Docker image.

First, a quick review of how Lambda + Docker + Python should work:

- AWS provides a base Docker image for Lambda + Python: https://docs.aws.amazon.com/lambda/latest/dg/python-image.html#python-image-base

- These base images expect the Python application to be installed within `/var/task` in the container.

- These base images also expect the Python dependencies to be installed within `/var/task`. This can be achieved by using `pip install --target /var/task`.

- Lambda expects a handler name in the form of a Python module path. For example, an application installed at `/var/task/app.py` with a function `handler()` has the module path `app.handler`.
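To make the module path idea concrete, here is a minimal sketch of how a string like `app.handler` can be resolved to a function with `importlib`. It is an illustration under assumptions, not the Lambda runtime's actual code: `load_handler` is a hypothetical helper, and a temporary directory stands in for `/var/task`.

```python
import importlib
import sys
import tempfile
from pathlib import Path


def load_handler(task_root: str, handler: str):
    """Resolve a handler string such as "app.handler" to a callable.

    Illustrative sketch only, not the Lambda runtime's code: everything
    before the last dot is treated as the module path and the final
    segment as the function name.
    """
    module_path, _, function_name = handler.rpartition(".")
    if not module_path:
        raise ValueError(f"handler {handler!r} should look like 'module.function'")
    # Make task_root importable, playing the role of /var/task in the base image.
    if task_root not in sys.path:
        sys.path.insert(0, task_root)
    importlib.invalidate_caches()
    module = importlib.import_module(module_path)
    return getattr(module, function_name)


if __name__ == "__main__":
    # A temporary directory stands in for /var/task and holds app.py.
    with tempfile.TemporaryDirectory() as task_root:
        Path(task_root, "app.py").write_text(
            "def handler(event, context):\n"
            "    return {'statusCode': 200, 'body': 'hello'}\n"
        )
        fn = load_handler(task_root, "app.handler")
        print(fn({}, None))  # {'statusCode': 200, 'body': 'hello'}
```

A nested path such as `pkg.app.handler` works the same way, except that `import_module` first has to import the `pkg` package before it can reach `pkg.app`.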

If the Docker container runs a web application, it can be desirable to hot reload changes to the web app when testing locally. One common way to implement hot reloading is to volume mount the source code from the host machine into the container, so that any changes to the source code on the host are immediately propagated to the running container. The volume mount can simply be done at run time: `docker run -v /home/elias/my_webapp:/var/task/my_webapp`.

Astute readers may notice that the mount command above mounts the source code to `/var/task/my_webapp` instead of `/var/task`. Why? If the application were mounted at `/var/task`, the volume mount would hide the Python dependencies that pip installed there. To get around this, we can move the application to `/var/task/my_webapp` and mount the source code directory from the host over only that subdirectory.

After moving the application, the Lambda handler module path has to be updated from `app.handler` to `my_webapp.app.handler`.

Now for the tip: if the application is deployed under `/var/task/my_webapp`, there is a chance that Lambda will throw an error saying that the handler cannot be imported. It took me about a day of troubleshooting, but it turns out that Lambda cannot import the handler from a subdirectory unless there is an `__init__.py` file in every directory of the application (including `my_webapp` itself). After creating `__init__.py` files within all of those directories, everything works as expected.

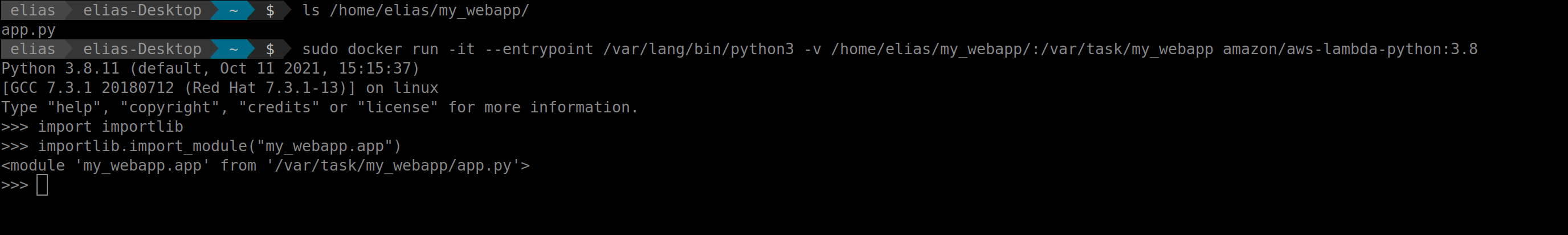
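Creating the files by hand gets tedious for a deep source tree. Below is a minimal sketch of a helper (the name `add_init_files` is hypothetical, and skipping hidden directories and `__pycache__` is an assumption about what you want) that adds an empty `__init__.py` to the application root and every directory below it.

```python
from __future__ import annotations

from pathlib import Path


def add_init_files(package_root: str) -> list[Path]:
    """Create an empty __init__.py in package_root and in every directory below it.

    Hidden directories (e.g. .git) and __pycache__ are skipped, and existing
    __init__.py files are left untouched. Returns the list of files created.
    """
    root = Path(package_root)
    created: list[Path] = []
    # Materialise the directory list first so that creating files does not
    # interfere with the recursive walk.
    candidates = [root, *root.rglob("*")]
    for directory in candidates:
        relative_parts = directory.relative_to(root).parts
        if any(part.startswith(".") or part == "__pycache__" for part in relative_parts):
            continue
        if not directory.is_dir():
            continue
        init_file = directory / "__init__.py"
        if not init_file.exists():
            init_file.touch()
            created.append(init_file)
    return created
```

Run from the directory that contains the application, `add_init_files("my_webapp")` would add the missing files. Because the directory is volume mounted, the new files also show up inside the running container.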

In theory, since Python 3.3, `__init__.py` files should not be required to import packages: PEP 420. I searched through the source code for the open-source Python Lambda runtime and found that `importlib` is used to import the handler, as seen here. I tried running the exact commands used within the open-source Lambda Python runtime and was able to import apps within subdirectories without the `__init__.py` files, as PEP 420 allows. My best guess is that the closed-source Lambda runtime uses an optimized C/C++ library for performance reasons, and that this library does not cleanly handle namespace packages as defined in PEP 420. Instead of going further down the rabbit hole, it is simply easier to create the init files and call it a day.

Screenshot: importing the app without the init files works when using the open-source Lambda runtime?
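The open-source experiment can be approximated in a few lines: build a `my_webapp` directory with no `__init__.py`, put its parent on `sys.path`, and import `my_webapp.app` with `importlib.import_module`. This is a sketch assuming a stock CPython 3.3+ interpreter. `import_without_init` is a hypothetical helper and is not taken from the Lambda runtime's source.

```python
import importlib
import sys
import tempfile
from pathlib import Path


def import_without_init(task_root: str, module_path: str):
    """Import e.g. "my_webapp.app" from task_root when my_webapp/ has no __init__.py.

    On Python 3.3+ such a directory is treated as a PEP 420 namespace package,
    so a plain importlib.import_module call is expected to succeed.
    """
    if task_root not in sys.path:
        sys.path.insert(0, task_root)
    importlib.invalidate_caches()
    return importlib.import_module(module_path)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as task_root:
        # Stand-in for /var/task/my_webapp, deliberately without __init__.py.
        package_dir = Path(task_root, "my_webapp")
        package_dir.mkdir()
        (package_dir / "app.py").write_text(
            "def handler(event, context):\n"
            "    return 'ok'\n"
        )
        module = import_without_init(task_root, "my_webapp.app")
        print(module.handler({}, None))  # ok
```

If this succeeds locally while the deployed function still fails to import the handler, adding the `__init__.py` files remains the pragmatic fix.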